Multi-Layer Density of Experts: Deepen AI Memory

We keep mistaking scale for understanding.

Every year, the models get larger, the benchmarks get higher, and yet — the conversations feel thinner.

We’re training machines to remember more, not mean more.

The next frontier isn’t trillion-parameter giants. It’s CoD on steroids:

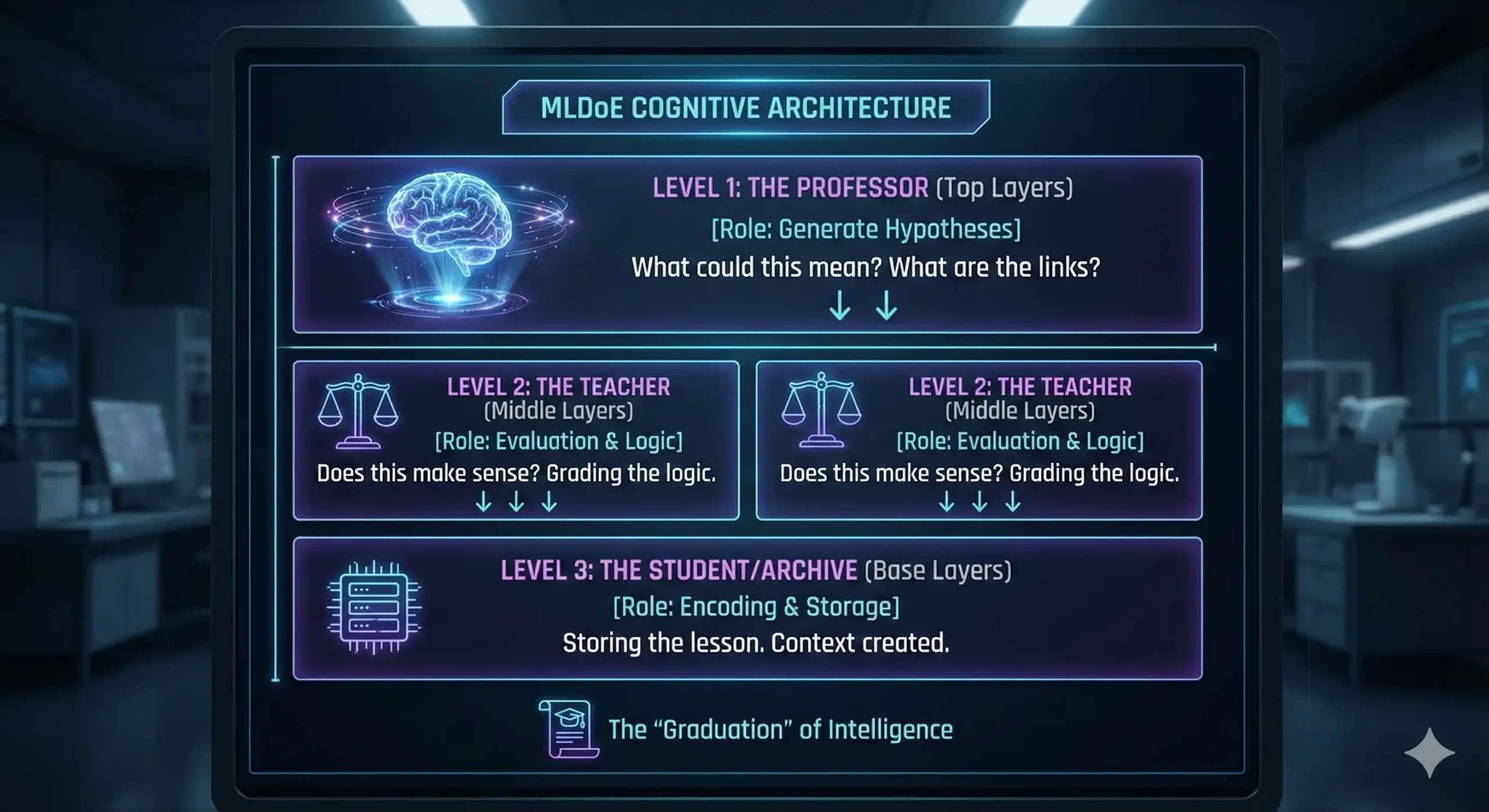

It’s Multi-Layer Density of Experts (MLDoE) — a cognitive architecture that distributes intelligence vertically, not horizontally.

Here’s the shift:

Mixture-of-Experts (MoE) was about specialization. Route tasks to the right neuron crowd.

MLDoE goes further — it builds an educational hierarchy within the model. Layers of experts don’t just perform; they teach.

![A conceptual diagram illustrating a workflow from left to right. On the left, a blue box labeled "INPUT" contains "[ RAW DATA ]". An arrow points to the center "PROCESS" section, featuring a complex, glowing 3D cube labeled "[ MLDoE ]" adorned with gears and circuitry. Below the cube are three buttons labeled "Learn," "Teach," and "Store." An arrow leads to the right "OUTPUT" section, where a glowing golden box contains "[ WISDOM ]".](https://lawngreen-mallard-558077.hostingersite.com/wp-content/uploads/2025/12/The-MLDoE-Transformation-Engine-From-Raw-Data-to-Wisdom-1024x559.png)

Each layer functions like a Department of Education — curating, compressing, and transferring semantic density downward. The top layers generate hypotheses. The middle layers evaluate them. The bottom layers encode them into reusable understanding.
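
To make the three roles concrete, here is a toy sketch of a generate, evaluate, encode pipeline in Python. It is not the MLDoE implementation. The function names, the keyword-overlap scoring, and the threshold are all assumptions chosen purely for illustration.

```python
# Toy illustration only: every name and scoring rule here is hypothetical.

def generate(raw: str) -> list[str]:
    """Top tier: propose one candidate 'hypothesis' per sentence."""
    return [s.strip() for s in raw.split(".") if s.strip()]


def evaluate(candidates: list[str], keywords: set[str]) -> dict[str, float]:
    """Middle tier: score each candidate by the fraction of keywords it contains."""
    scores = {}
    for text in candidates:
        words = set(text.lower().split())
        scores[text] = len(words & keywords) / len(keywords) if keywords else 0.0
    return scores


def encode(scores: dict[str, float], threshold: float) -> dict[str, float]:
    """Bottom tier: keep only candidates that pass the grade, as reusable memory."""
    return {text: s for text, s in scores.items() if s >= threshold}


def run_pipeline(raw: str, keywords: set[str], threshold: float = 0.5) -> dict[str, float]:
    """Pass raw text down through all three tiers."""
    return encode(evaluate(generate(raw), keywords), threshold)


if __name__ == "__main__":
    memory = run_pipeline(
        "Experts route tokens. The weather is nice. Experts teach layers",
        keywords={"experts", "layers"},
    )
    for text, score in memory.items():
        print(f"{score:.2f}  {text}")
```

In this sketch the off-topic sentence never reaches the bottom tier, which is the filtering behavior the hierarchy describes. A real system would replace each function with learned components.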

This is not just computation. It’s pedagogy.

Instead of raw token memorization, this multi-layer design introduces cognitive scaffolding: teaching the model how to self-grade and self-summarize without losing context.

In practice, that means:

- Memory systems that get sharper with use, not slower.

- Reasoning loops that build understanding, not repetition.

- Context compression that becomes context creation.
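
One way to picture "self-grade and self-summarize" is a loop that compresses text, grades its own summary against the original, and keeps adding material until the grade passes. The sketch below is a minimal assumption-laden toy, not the protocol itself. The extractive ranking, the word-coverage grade, and the `target` parameter are all hypothetical.

```python
import re
from collections import Counter


def _words(text: str) -> list[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


def _sentences(text: str) -> list[str]:
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def grade(original: str, summary: str) -> float:
    """Self-grade: fraction of the original's distinct words kept in the summary."""
    source = set(_words(original))
    if not source:
        return 1.0
    return len(source & set(_words(summary))) / len(source)


def self_summarize(text: str, target: float = 0.6) -> str:
    """Add sentences (most word-frequent first) until the self-grade reaches target."""
    sentences = _sentences(text)
    freq = Counter(_words(text))
    ranked = sorted(
        range(len(sentences)),
        key=lambda i: -sum(freq[w] for w in _words(sentences[i])),
    )
    kept: list[int] = []
    for i in ranked:
        kept.append(i)
        # Rebuild the summary in original sentence order to preserve context.
        summary = " ".join(sentences[j] for j in sorted(kept))
        if grade(text, summary) >= target:
            return summary
    return text  # nothing to compress, or target could not be met


if __name__ == "__main__":
    doc = "Experts teach layers. Layers store what experts teach. The sky is blue."
    print(self_summarize(doc, target=0.5))
```

The design choice worth noting is that the grade is computed against the original text on every pass, so compression never proceeds without a check on what was lost.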

When tested across 11 LLM families, MLDoE [Context Extension Protocol Fork] outperformed traditional memory and summarization protocols by over 10× in information recall fidelity.

It didn’t just remember — it reorganized knowledge.

The result? Models that behave less like giant libraries and more like living universities.

An expert isn’t a static model head; it’s a faculty, learning to teach the others how to think.

A structure that evolves like the human mind: dense, recursive, interconnected.

The future of intelligence won’t come from GPUs alone.

It’ll come from better curricula inside the machine.

From architectures that teach themselves how to learn.

Because maybe we’ve been building AIs like factories.

But what we need are schools — where knowledge doesn’t just pass through layers, it graduates.

COLLABORATE, don’t just evaluate… or listen 18 months from now.